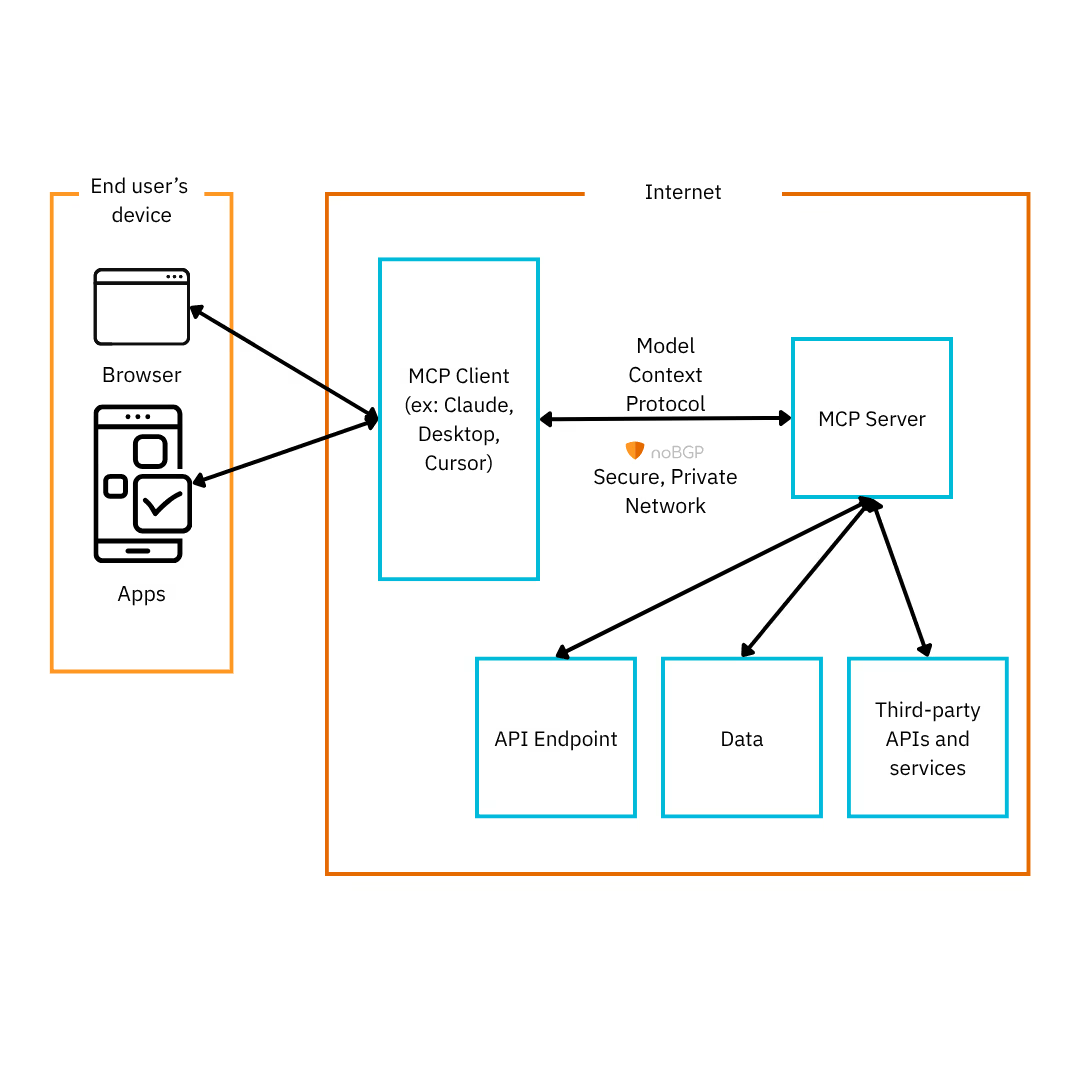

As AI adoption accelerates, so does the complexity of running large-scale inference and retrieval workflows across distributed compute environments. Model Context Protocol (MCP) is an open protocol, introduced by Anthropic in late 2024. It standardizes how AI applications connect to external tools and data sources, share context with them, and retrieve data across cloud-native infrastructure.

More than just an AI protocol, MCP introduces a networking layer for model-to-model, model-to-database, and model-to-human interactions—with a strong emphasis on interoperability, statelessness, and performance.

This article explores how MCP works, what it does, and how it fits into the broader AI ecosystem, especially for teams building real-time applications, multi-agent systems, and hybrid AI deployments.

At its core, Model Context Protocol (MCP) is a lightweight protocol for context-aware communication between AI applications and the tools and data sources they use. It defines how to exchange queries, responses, metadata, and references across a distributed system, often in real time.

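MCP acts as a connectivity layer that abstracts how different components in an AI system interact. An AI application (the host) runs an MCP client for each MCP server it connects to. Each server exposes its capabilities in the same standard way, whether those are tools, readable resources, or prompt templates.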

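Unlike a hand-written REST integration for every service, MCP is designed for AI context orchestration. It is itself built on JSON-RPC 2.0. A client can ask a server what it offers at runtime and then use those capabilities without service-specific glue code, so large models can connect to other services dynamically.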
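Here is a minimal sketch of runtime discovery: a client inspects a server's `tools/list` result and checks a call's arguments against the advertised schema. As we understand the MCP specification, it uses the field names shown here (`tools`, `name`, `description`, `inputSchema`). The two tools and the helpers `find_tool` and `missing_arguments` are hypothetical. A real client would receive this JSON over a transport rather than from a string.

```python
import json
from typing import Optional

# A sample `tools/list` result. The field names (tools, name, description,
# inputSchema) follow the MCP spec; the two tools themselves are hypothetical.
TOOLS_LIST_RESPONSE = json.loads("""
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {"name": "search_docs",
       "description": "Search internal documentation",
       "inputSchema": {"type": "object",
                       "properties": {"query": {"type": "string"}},
                       "required": ["query"]}},
      {"name": "get_weather",
       "description": "Current weather for a city",
       "inputSchema": {"type": "object",
                       "properties": {"city": {"type": "string"}},
                       "required": ["city"]}}
    ]
  }
}
""")


def find_tool(response: dict, name: str) -> Optional[dict]:
    """Return the descriptor for `name`, or None if the server does not offer it."""
    for tool in response["result"]["tools"]:
        if tool["name"] == name:
            return tool
    return None


def missing_arguments(tool: dict, arguments: dict) -> list:
    """List the required fields from the tool's inputSchema that `arguments` lacks."""
    required = tool["inputSchema"].get("required", [])
    return [field for field in required if field not in arguments]


tool = find_tool(TOOLS_LIST_RESPONSE, "search_docs")
print(tool["description"])                        # Search internal documentation
print(missing_arguments(tool, {}))                # ['query']
print(missing_arguments(tool, {"query": "MCP"}))  # []
```

Because the client learns the schema at runtime, adding a tool on the server side needs no client code change.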

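MCP defines a structured message format based on JSON-RPC 2.0:

- Requests carry a `method` name, optional `params`, and an `id`.
- Responses echo that `id` with either a `result` or an `error`.
- Notifications are one-way messages without an `id`.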

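These messages travel over a transport layer, and the specification defines two standard transports. Standard input/output (stdio) serves servers running as local subprocesses. Streamable HTTP, which can stream responses with Server-Sent Events, serves remote servers. Implementations may also add custom transports, such as WebSockets.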
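Here is a sketch of what these messages look like on the wire. The helpers below build the three JSON-RPC 2.0 message kinds and frame them the way the stdio transport does: one JSON object per line. The helper names are hypothetical. `tools/call` and `notifications/initialized` are MCP method names, and `search_docs` is a made-up tool.

```python
import json
from typing import Any, Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """JSON-RPC 2.0 request: the receiver must answer with the same id."""
    message: dict = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request_id: int, result: Any) -> dict:
    """Successful response, matched to its request by id."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def make_error(request_id: int, code: int, message: str) -> dict:
    """Error response; codes such as -32601 (method not found) come from JSON-RPC."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """One-way message: no id, so the receiver sends no reply."""
    message: dict = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def encode_stdio(message: dict) -> bytes:
    """stdio framing: one JSON object per line. json.dumps escapes any newlines
    inside strings, so the only raw newline is the delimiter."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_stdio(data: bytes) -> list:
    """Split a stdio byte stream back into messages, one per non-empty line."""
    return [json.loads(line) for line in data.decode("utf-8").splitlines() if line.strip()]


# `search_docs` is a hypothetical tool name; `tools/call` and its
# name/arguments params follow the MCP spec.
call = make_request(2, "tools/call", {"name": "search_docs", "arguments": {"query": "transports"}})
stream = encode_stdio(make_notification("notifications/initialized")) + encode_stdio(call)
for msg in decode_stdio(stream):
    print(msg.get("id"), msg["method"])
# None notifications/initialized
# 2 tools/call
```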

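Once a request is received, the MCP server dispatches it according to its `method`:

- `tools/call` invokes a named tool.
- `resources/read` returns the contents of a resource.
- `prompts/get` returns a prompt template.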
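Here is a minimal sketch of server-side dispatch, assuming an in-memory handler table. Each `method` maps to a function. Unknown methods get the JSON-RPC "method not found" error (-32601), and bad parameters get "invalid params" (-32602). The `DOCS` data, the `search_docs` tool, and all helper names are hypothetical. The result shapes (`content`, `contents`, `isError`) follow the MCP specification as we understand it.

```python
import json
from typing import Callable, Dict

# Hypothetical in-memory data standing in for a real document store.
DOCS = {"docs://mcp/overview": "MCP connects AI applications to tools and data."}


class RpcError(Exception):
    """Carries a JSON-RPC error code back to the dispatcher."""

    def __init__(self, code: int, message: str):
        super().__init__(message)
        self.code = code
        self.message = message


def search_docs(arguments: dict) -> dict:
    """Hypothetical tool: return URIs whose text contains the query string."""
    query = arguments["query"].lower()
    hits = [uri for uri, text in DOCS.items() if query in text.lower()]
    return {"content": [{"type": "text", "text": json.dumps(hits)}], "isError": False}


TOOLS: Dict[str, Callable[[dict], dict]] = {"search_docs": search_docs}


def tools_call(params: dict) -> dict:
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # -32602 is the generic JSON-RPC "invalid params" code.
        raise RpcError(-32602, f"Unknown tool: {params.get('name')}")
    return tool(params.get("arguments", {}))


def resources_read(params: dict) -> dict:
    uri = params.get("uri")
    if uri not in DOCS:
        raise RpcError(-32602, f"Unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": DOCS[uri]}]}


HANDLERS: Dict[str, Callable[[dict], dict]] = {
    "tools/call": tools_call,
    "resources/read": resources_read,
}


def dispatch(request: dict) -> dict:
    """Route a request to the handler registered for its method and wrap the outcome."""
    request_id = request.get("id")
    handler = HANDLERS.get(request.get("method"))
    try:
        if handler is None:
            raise RpcError(-32601, f"Method not found: {request.get('method')}")
        result = handler(request.get("params", {}))
        return {"jsonrpc": "2.0", "id": request_id, "result": result}
    except RpcError as err:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": err.code, "message": err.message}}


print(dispatch({"jsonrpc": "2.0", "id": 7, "method": "tools/call",
                "params": {"name": "search_docs", "arguments": {"query": "tools"}}}))
```

As we read the specification, protocol errors like these are kept separate from failures inside a tool. A server normally reports the latter in the tool result with `isError` set to true.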

MCP is meant to connect many clients, tools, and agents. Its messages can therefore be exchanged asynchronously, and clients and servers can run in different compute zones or clouds.

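From a connectivity perspective, an MCP deployment can behave like a stateless router rather than a set of long-lived, server-pinned sessions. As long as servers keep no per-session data in local memory, any replica can handle any request.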

This enables MCP to work in hybrid environments where the requester and responder are in different VPCs, clouds, or even physical locations—ideal for distributed inference or memory augmentation architectures.

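MCP servers can be run statelessly, although the protocol itself is not session-free. Each connection begins with an initialization handshake in which client and server negotiate capabilities, and over Streamable HTTP a server may assign a session ID. A server can still keep its application state in external stores rather than in process memory.

Servers can log traffic or cache certain results, but this design keeps no critical per-session state on any single instance. That improves scalability and fault tolerance: if one MCP server goes down, another replica can pick up its traffic.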
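The failover described above can be sketched as a client-side router that rotates through replicas and skips any that are down. This is an illustration under stated assumptions:

- The `Replica` and `FailoverRouter` classes are hypothetical.
- A dictionary stands in for an external store such as a database or cache.
- The `servedBy` and `calls` result fields are invented for the demo.

```python
import itertools
from typing import Dict, List

# Stand-in for an external store (database, cache) shared by every replica.
# Because state lives here rather than in a replica's memory, any replica can answer.
SHARED_STORE: Dict[str, int] = {}


class Replica:
    """Hypothetical MCP server instance; set `healthy = False` to simulate an outage."""

    def __init__(self, name: str, healthy: bool = True):
        self.name = name
        self.healthy = healthy

    def handle(self, request: dict) -> dict:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unreachable")
        tool = request["params"]["name"]
        # Update the shared counter, not anything stored on this replica.
        SHARED_STORE[tool] = SHARED_STORE.get(tool, 0) + 1
        # `servedBy` and `calls` are illustrative fields, not part of MCP.
        return {"jsonrpc": "2.0", "id": request["id"],
                "result": {"servedBy": self.name, "calls": SHARED_STORE[tool]}}


class FailoverRouter:
    """Round-robin across replicas, falling through to the next one on failure."""

    def __init__(self, replicas: List[Replica]):
        self.replicas = replicas
        self._next_start = itertools.cycle(range(len(replicas)))

    def send(self, request: dict) -> dict:
        start = next(self._next_start)
        for offset in range(len(self.replicas)):
            replica = self.replicas[(start + offset) % len(self.replicas)]
            try:
                return replica.handle(request)
            except ConnectionError:
                continue  # try the next replica
        raise ConnectionError("no healthy replicas")


east, west = Replica("us-east"), Replica("eu-west")
router = FailoverRouter([east, west])
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "search_docs", "arguments": {"query": "MCP"}}}
print(router.send(request)["result"])  # {'servedBy': 'us-east', 'calls': 1}
east.healthy = False
print(router.send(request)["result"])  # {'servedBy': 'eu-west', 'calls': 2}
print(router.send(request)["result"])  # {'servedBy': 'eu-west', 'calls': 3}
```

The handover works only because nothing the next request needs is kept on the failed replica. If a deployment uses Streamable HTTP session IDs, either the client must re-initialize or the session data must live in the shared store as well.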

This design also aligns with modern cloud practices such as running interchangeable replicas behind a load balancer and autoscaling them with demand.

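Ideally, each MCP request is self-contained: a tool call either completes or fails as a unit and, if it has side effects, is safe to retry (idempotent). Models and tools can then be coordinated without keeping per-user or per-session state on any single server.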
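Idempotency matters because clients retry after timeouts, and an idempotency key is one common way to get it. The sketch below assumes two hypothetical pieces. The first is a client-supplied key, a convention of our own rather than something MCP defines. The second is a `create_ticket` tool whose side effect we can count.

```python
from typing import Callable, Dict, List

# Completed results keyed by a client-supplied idempotency key (a hypothetical
# convention; MCP does not define one). A real deployment would keep this in a
# shared store so that every replica sees the same entries.
_completed: Dict[str, dict] = {}
tickets: List[str] = []  # records the side effect so retries are observable


def create_ticket(arguments: dict) -> dict:
    """Hypothetical tool with a side effect: it opens a support ticket."""
    tickets.append(arguments["title"])
    return {"content": [{"type": "text", "text": f"ticket #{len(tickets)} created"}]}


def idempotent_call(key: str, handler: Callable[[dict], dict], arguments: dict) -> dict:
    """Run `handler` at most once per key; a retry with the same key gets the stored result."""
    if key not in _completed:
        _completed[key] = handler(arguments)
    return _completed[key]


first = idempotent_call("req-42", create_ticket, {"title": "Login fails"})
retry = idempotent_call("req-42", create_ticket, {"title": "Login fails"})  # e.g. after a timeout
print(first == retry, len(tickets))  # True 1
```

With concurrent retries, the check and the write would have to become one atomic operation in the shared store, such as an insert-if-absent. Otherwise two replicas could both run the handler.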

MCP is a protocol, not a product. The specification is openly published, so anyone can implement it, but whether a given MCP server is free depends on who builds and hosts it.

In experimental or community settings, many developers run their own MCP server infrastructure using open-source libraries or containers.

MCP does not replace RAG (Retrieval-Augmented Generation) directly, but it can enhance or orchestrate RAG workflows.

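A typical RAG setup runs in three steps:

1. The user's query is embedded.
2. The most relevant passages are retrieved from a vector store or search index.
3. Those passages are inserted into the prompt so the LLM can ground its answer in them.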
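The retrieve-then-augment steps can be sketched in a few lines. This is a toy, not a production retriever. Word overlap stands in for embedding similarity, a dictionary stands in for the vector store, and the final call to an LLM is left out. All names are hypothetical.

```python
import re
from collections import Counter
from typing import List

# Hypothetical mini-corpus standing in for a vector store or search index.
CORPUS = {
    "mcp-transports": "MCP defines stdio and Streamable HTTP transports.",
    "mcp-messages": "MCP messages use JSON-RPC 2.0 requests, responses, and notifications.",
    "rag-basics": "RAG retrieves relevant passages and adds them to the prompt.",
}


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, k: int = 2) -> List[str]:
    """Rank passages by shared word count with the query (a crude stand-in for
    embedding similarity) and return up to k ids with a non-zero score."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc_id, text in CORPUS.items():
        overlap = sum((query_counts & Counter(tokenize(text))).values())
        scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query: str, doc_ids: List[str]) -> str:
    """Augment step: put the retrieved passages in front of the question."""
    context = "\n".join(f"[{doc_id}] {CORPUS[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


question = "Which transports does MCP define?"
print(build_prompt(question, retrieve(question)))
```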

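MCP provides a flexible framework to coordinate these steps. Instead of hardcoding the retrieval and generation logic, you can expose each retriever (a vector store, a search index, a database) as an MCP tool or resource. The model, or an orchestrating agent, then decides which one to call for a given question.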

This makes MCP especially useful for multi-agent RAG, domain-specific orchestration, or retrieval across multiple knowledge domains.
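Here is a sketch of retrieval across multiple knowledge domains. Each domain's retriever is described like a `tools/list` entry and invoked by name, so an orchestrator (or the model itself) can pick one or fan out to all of them. The knowledge bases, tool names, and helper functions are hypothetical. A real deployment would put each retriever behind its own MCP server.

```python
import json
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical knowledge bases, one per domain.
KNOWLEDGE = {
    "hr": {"leave-policy": "Employees accrue two days of leave per month."},
    "eng": {"deploy-guide": "Deployments run through the CI pipeline after code review."},
}

Tool = Tuple[dict, Callable[[dict], dict]]


def words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def make_retriever_tool(domain: str) -> Tool:
    """Describe one domain's retriever the way a tools/list entry would, plus its handler."""
    descriptor = {
        "name": f"search_{domain}",
        "description": f"Search the {domain} knowledge base",
        "inputSchema": {"type": "object",
                        "properties": {"query": {"type": "string"}},
                        "required": ["query"]},
    }

    def handler(arguments: dict) -> dict:
        query_words = words(arguments["query"])
        hits = [doc_id for doc_id, text in KNOWLEDGE[domain].items()
                if query_words & words(text)]
        return {"content": [{"type": "text", "text": json.dumps(hits)}]}

    return descriptor, handler


REGISTRY: Dict[str, Tool] = {}
for domain_name in KNOWLEDGE:
    descriptor, handler = make_retriever_tool(domain_name)
    REGISTRY[descriptor["name"]] = (descriptor, handler)


def list_tools() -> List[dict]:
    return [descriptor for descriptor, _ in REGISTRY.values()]


def call_tool(name: str, arguments: dict) -> dict:
    _, handler = REGISTRY[name]
    return handler(arguments)


def search_all_domains(query: str) -> Dict[str, List[str]]:
    """Fan the query out to every retriever tool and collect hits per tool."""
    results = {}
    for tool in list_tools():
        result = call_tool(tool["name"], {"query": query})
        results[tool["name"]] = json.loads(result["content"][0]["text"])
    return results


print(search_all_domains("how many leave days"))
# {'search_hr': ['leave-policy'], 'search_eng': []}
```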

MCP can work with OpenAI and other API-accessible models.

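Some MCP deployments use OpenAI models as the LLM backend. In that setup, the application that talks to the model acts as the MCP client. It passes the tools advertised by MCP servers to the model, forwards the model's tool calls to the right server, and returns the results to the model.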
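Here is a minimal sketch of the translation that the application sitting between MCP tools and an OpenAI model performs. It assumes the current shapes of both payloads:

- MCP tool descriptors use `name`, `description`, and `inputSchema`.
- OpenAI Chat Completions tools take the form `{"type": "function", "function": {...}}`.
- OpenAI `tool_calls` deliver their arguments as a JSON string.

The actual API request and the MCP transport are omitted, and the `search_docs` tool and `call_1` id are made up.

```python
import json


def mcp_tool_to_openai(tool: dict) -> dict:
    """Map an MCP tool descriptor (from tools/list) to a Chat Completions `tools` entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],  # both sides use JSON Schema
        },
    }


def openai_tool_call_to_mcp(tool_call: dict, request_id: int) -> dict:
    """Turn a model's tool call into an MCP tools/call request.
    OpenAI delivers the arguments as a JSON-encoded string; MCP expects an object."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": tool_call["function"]["name"],
            "arguments": json.loads(tool_call["function"]["arguments"]),
        },
    }


def mcp_result_to_tool_message(tool_call_id: str, result: dict) -> dict:
    """Flatten the text parts of an MCP tool result into a `tool` role message for the model."""
    text = "\n".join(part["text"] for part in result["content"] if part.get("type") == "text")
    return {"role": "tool", "tool_call_id": tool_call_id, "content": text}


# Hypothetical tool and model output, shaped like the real payloads.
search_docs = {"name": "search_docs", "description": "Search internal documentation",
               "inputSchema": {"type": "object",
                               "properties": {"query": {"type": "string"}},
                               "required": ["query"]}}
model_call = {"id": "call_1", "type": "function",
              "function": {"name": "search_docs", "arguments": '{"query": "MCP transports"}'}}

print(mcp_tool_to_openai(search_docs)["function"]["name"])  # search_docs
print(openai_tool_call_to_mcp(model_call, 3)["params"])
```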

Because MCP is model-agnostic, the same MCP servers can work with hosted API models as well as self-hosted or open-weight models.

This makes it an ideal framework for building AI stacks that mix public APIs with private intelligence.
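A model-agnostic setup can be sketched as a small routing policy. Tool output gathered through MCP is combined into a prompt, and a rule decides which backend completes it. The backends here are hypothetical stubs that echo the prompt. The "sensitive data goes to the private model" policy is just one illustrative rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Backend:
    name: str
    kind: str                       # "public-api" or "private"
    complete: Callable[[str], str]  # prompt -> completion


# Hypothetical stand-ins; real versions would call a hosted API or a self-hosted model server.
def hosted_model(prompt: str) -> str:
    return f"[hosted] {prompt}"


def private_model(prompt: str) -> str:
    return f"[private] {prompt}"


BACKENDS: Dict[str, Backend] = {
    "hosted": Backend("hosted", "public-api", hosted_model),
    "private": Backend("private", "private", private_model),
}


def pick_backend(sensitive: bool) -> Backend:
    """Example policy: keep prompts containing sensitive data on the private model."""
    return BACKENDS["private"] if sensitive else BACKENDS["hosted"]


def answer(question: str, tool_context: str, sensitive: bool) -> str:
    """The same MCP tool output (`tool_context`) can feed whichever backend the policy picks."""
    prompt = f"Context: {tool_context}\nQuestion: {question}"
    return pick_backend(sensitive).complete(prompt)


print(answer("What is our leave policy?", "Employees accrue two days per month.", sensitive=True))
```

Keeping the policy separate from the tools means the MCP servers never need to know which model consumed their output.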

MCP (Model Context Protocol) is a powerful enabler for the next generation of AI systems—especially those operating across hybrid cloud, edge compute, and multi-agent designs. It’s not just about inference; it’s about intelligent, context-aware routing of tasks between models, tools, memory, and users.

By focusing on statelessness, network flexibility, and context-first design, MCP empowers developers to build scalable, modular, and interoperable AI systems that can plug into any model, anywhere.

Whether you’re building a custom RAG system, deploying agents across clouds, or routing LLM queries with precision, MCP is the protocol to watch.